Current state: ["Under Discussion"]

ISSUE: #17599

PRs:

Keywords: search, range search, radius, low bound, high bound

Released:

Summary(required)

By now, doing "search" in Milvus will return TOPK most similar results for each vector being searched.

This MEP is about to realize a functionality named "range search". User specifies a range scope -- including radius low bound and radius high bound, Milvus does "range search", and also returns TOPK sorted results with distance falling in this range scope.

- both radius low bound and radius high bound MUST be set

- range search will return TOPK results with

- for L2: low_bound <= distance < high_bound

- for IP: low_bound < distance <= high_bound

Motivation(required)

Many users request Milvus to realize a "range search" functionality. With this capability, user can

- get results with distance falling in a range scope

- get search results more than 16384

Public Interfaces(optional)

- No interface change in Milvus and all SDKs

We reuse the SDK interface Search() to do "range search". Only add 2 more parameters "radius_low_bound" and "radius_high_bound" into params.

When "radius_low_bound" and "radius_high_bound" are specified, Milvus does range search; otherwise, Milvus does search.

As shown in the following figure, set "radius_low_bound": 1.0, "radius_high_bound": 2.0 in search_ params.params.

default_index = {"index_type": "HNSW", "params":{"M": 48, "efConstruction": 500}, "metric_type": "L2"}

collection.create_index("float_vector", default_index)

collection.load()

search_params = {"metric_type": "L2", "params": {"ef": 32, "radius_low_bound": 1.0, "radius_high_bound": 2.0}}

res = collection.search(vectors[:nq], "float_vector", search_params, limit, "int64 >= 0")

- 3 new interfaces in knowhere

// range search parameter legacy check virtual bool CheckRangeSearch(Config& cfg, const IndexType type, const IndexMode mode); // range search virtual DatasetPtr QueryByRange(const DatasetPtr& dataset, const Config& config, const faiss::BitsetView bitset); // brute force range search static DatasetPtr BruteForce::RangeSearch(const DatasetPtr base_dataset, const DatasetPtr query_dataset, const Config& config, const faiss::BitsetView bitset);

Design Details(required)

Knowhere

We add 3 new APIs CheckRangeSearch(), QueryByRange() and BruteForce::RangeSearch() to support range search, these APIs are already available since knowhere-v1.3.0.

- QueryByRange()

This API is used to get all unsorted results with distance "better than radius" (for L2: < radius; for IP: > radius).

| PROTO | DatasetPtr QueryByRange(const DatasetPtr&, const Config&, const faiss::BitsetView); |

INPUT | Dataset { Config { knowhere::meta::RADIUS: - // radius for range search } |

OUTPUT | Dataset { |

LIMS is with length "nq+1", it's the offset prefix sum for result IDS and result DISTANSES. The length of IDS and DISTANCES are the same but variable.

Suppose N queried vectors is with label: {0, 1, 2, ..., n-1}

The result counts for each queried vectors are: {r(0), r(1), r(2), ..., r(n-1)}

Then the data in LIMS will be like this: {0, r(0), r(0)+r(1), r(0)+r(1)+r(2), ..., r(0)+r(1)+r(2)+...+r(n-1)}

The total range search result num is: LIMS[nq]

The range search result for each query vector is: IDS[lims[n], lims[n+1]) and DISTANCE[lims[n], lims[n+1])

The memory used for IDS, DISTANCE and LIMS are allocated in Knowhere, they will be auto-freed when Dataset deconstruction.

By new, following index types support QueryByRange():

BinaryIDMAP

BinaryIVF

IDMAP

IVF_FLAT

IVF_PQ

IVF_SQ8

HNSW

Milvus

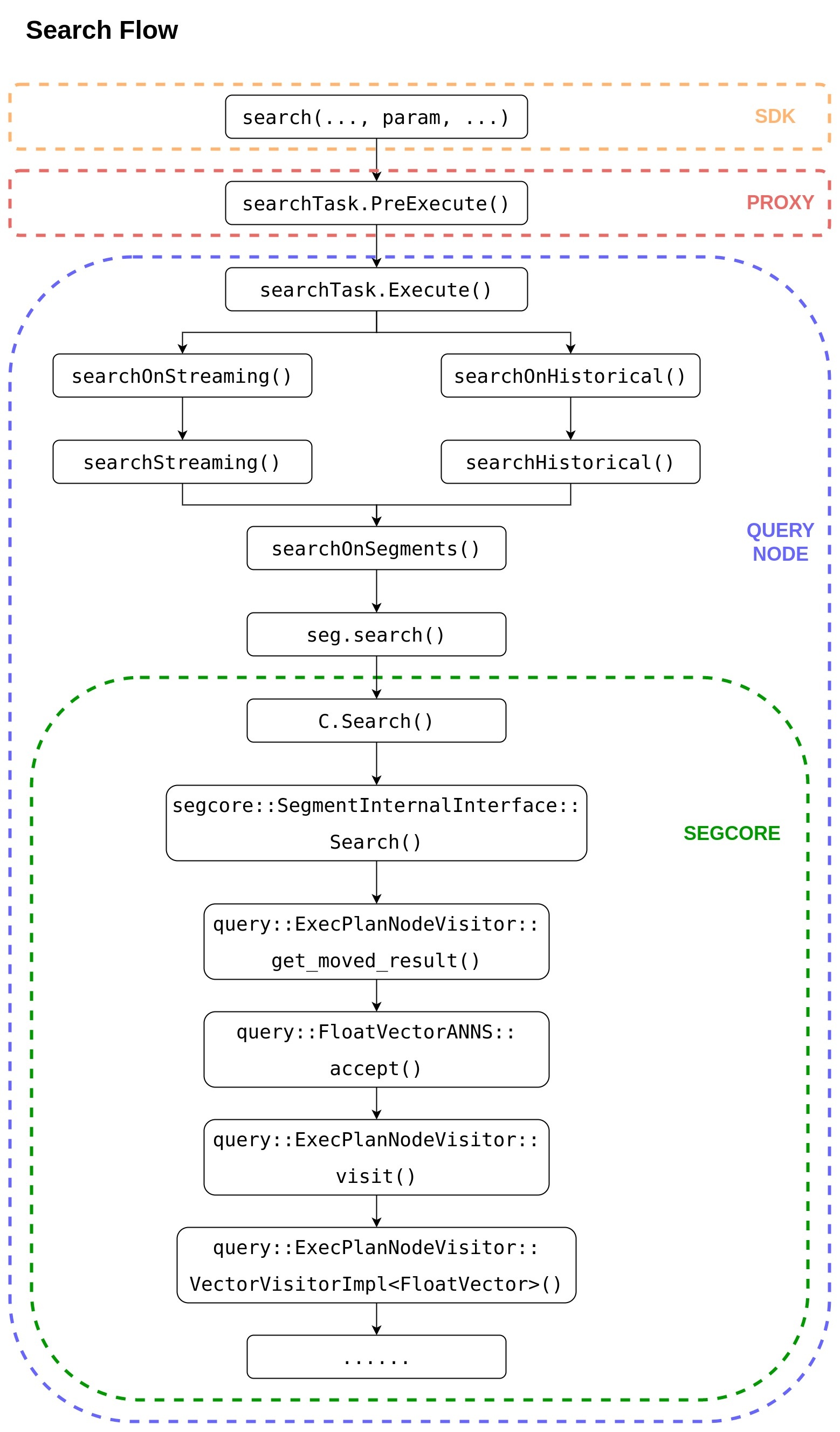

Range search completely reuses the call stack from SDK to segcore.

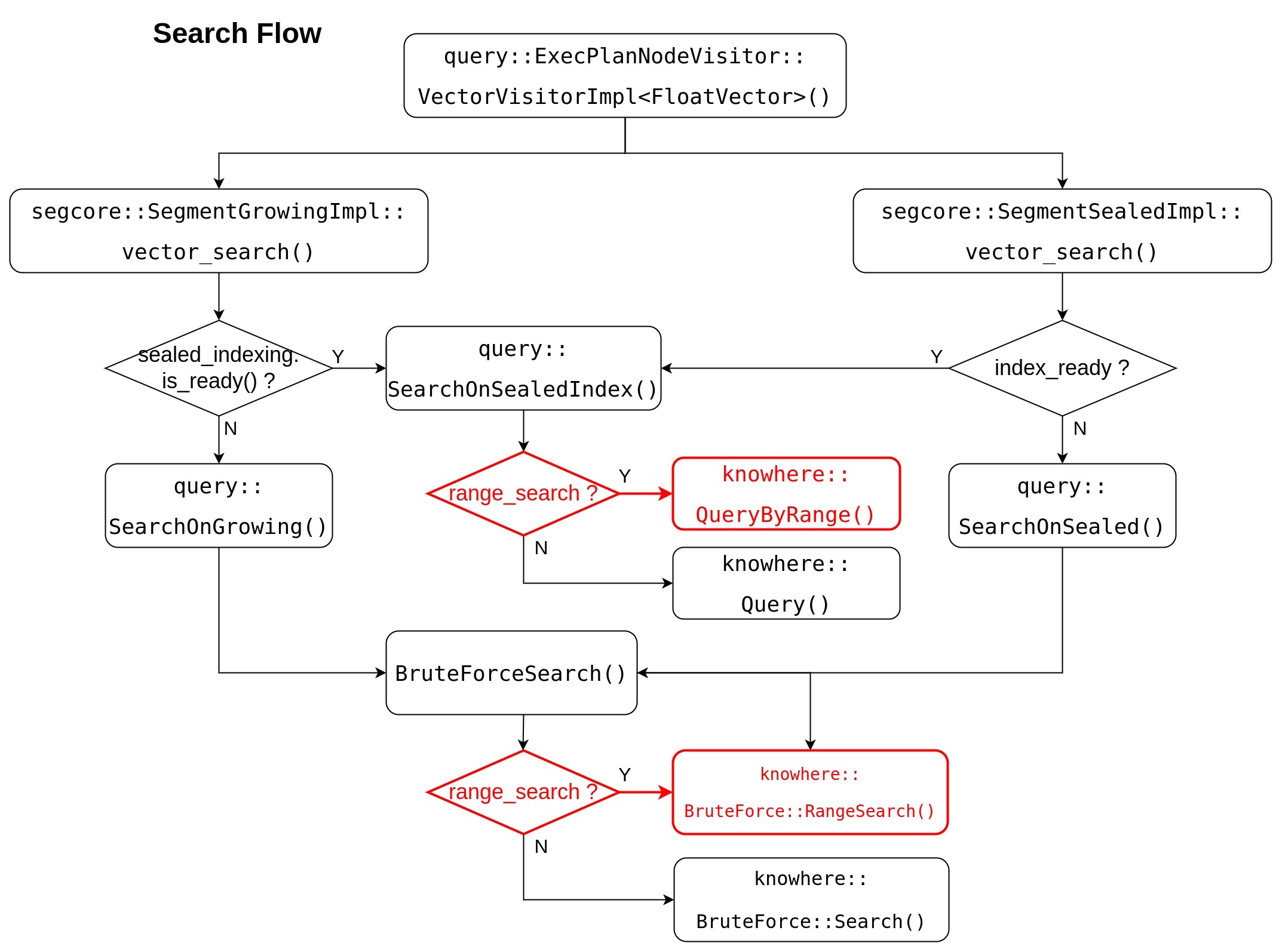

Segcore search flow will be updated as this flow chart, range search related change is marked RED.

In API query::SearchOnSealedIndex() and BruteForceSearch(), when "radius_low_bound" and "radius_high_bound" are set, range search is called; otherwise, search is called.

For API query::SearchOnSealedIndex(), the result returned by knowhere::QueryByRange() will be sorted and filtered, only results with distance falling in the range scope will be returned.

The same as API BruteForceSearch(), the result returned by knowhere::BruteForce::RangeSearch() will be sorted and filtered, only results with distance falling in the range scope will be returned.

The output of range search is exactly as same as the result of search.

- seg_offsets_: with length "nq * topk", -1 is filled in when no enough result data

- distances_: with lengh "nq * topk", data is undefined when no enough result data

Compatibility, Deprecation, and Migration Plan(optional)

There is no compatibility issue.

Test Plan(required)

For Knowhere

- Add new unittest

- Add benchmark using range search dataset

There is no public data set for range search. I have created range search data set based on sift1M and glove200.

You can find them in NAS:

test/milvus/ann_hdf5/sift-128-euclidean-range.hdf5

test/milvus/ann_hdf5/glove-200-angular-range.hdf5

test/milvus/ann_hdf5/binary/sift-4096-hamming-range.hdf5

For Milvus

- add unittest in segcore

- use sift1M/glove200 dataset to test range search, we expect to get identical result as search

Rejected Alternatives(optional)

The previous proposal of this MEP is let range search return all results with distances better than a "radius".

The project implementation of the previous proposal will be much more complicated than current proposal.

Cons:

- Need implement Merge operation for chunk, segment, query node and proxy

- Memory explosion caused by Merge

- Many API modification caused by invalid topk parameter

Current proposal is better than the previous one in all respects.