...

Note: the "state" can be one of "pending", "started", "downloaded", "parsed", "persisted", "completed", or "failed".
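
As a rough illustration, the state values can be modeled as an enumeration on the client side. The class name `ImportState` and the helper `is_terminal` are hypothetical, and treating "completed" and "failed" as the only terminal states is an assumption, not something this document specifies.

```python
from enum import Enum


class ImportState(Enum):
    """Import task states, in the order listed in the note above."""
    PENDING = "pending"
    STARTED = "started"
    DOWNLOADED = "downloaded"
    PARSED = "parsed"
    PERSISTED = "persisted"
    COMPLETED = "completed"
    FAILED = "failed"


# Assumption: a task never leaves "completed" or "failed".
TERMINAL_STATES = {ImportState.COMPLETED, ImportState.FAILED}


def is_terminal(state: str) -> bool:
    """Return True if the state string names a terminal state."""
    return ImportState(state) in TERMINAL_STATES
```

A client polling get_import_state() could stop once `is_terminal` returns True for the reported state.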

Pre-defined format for import files

...

| Code Block |

|---|

{

"rows":[

{"uid": 101, "vector": [1.1, 1.2, 1.3, 1.4]},

{"uid": 102, "vector": [2.1, 2.2, 2.3, 2.4]},

{"uid": 103, "vector": [3.1, 3.2, 3.3, 3.4]},

{"uid": 104, "vector": [4.1, 4.2, 4.3, 4.4]},

{"uid": 105, "vector": [5.1, 5.2, 5.3, 5.4]}

]

} |
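
A file in this shape can be produced with any JSON library. The sketch below uses Python's standard `json` module; the helper names and the file name are hypothetical and only illustrate the layout, with a top-level "rows" key holding a list of row objects.

```python
import json
import os
import tempfile


def write_row_based_file(path, rows):
    """Write rows as {"rows": [...]}; json.dump emits strict JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"rows": rows}, f)


def read_row_based_file(path):
    """Load a row-based file and return its list of row objects."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)["rows"]


# The first two rows of the sample above.
sample_rows = [
    {"uid": 101, "vector": [1.1, 1.2, 1.3, 1.4]},
    {"uid": 102, "vector": [2.1, 2.2, 2.3, 2.4]},
]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "file_1.json")  # hypothetical file name
    write_row_based_file(path, sample_rows)
    loaded_rows = read_row_based_file(path)
```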

Call import() to import the file:

...

| Code Block |

|---|

import(collection_name="test", row_based=false, files=["file_1.json", "vector.npy"]) |

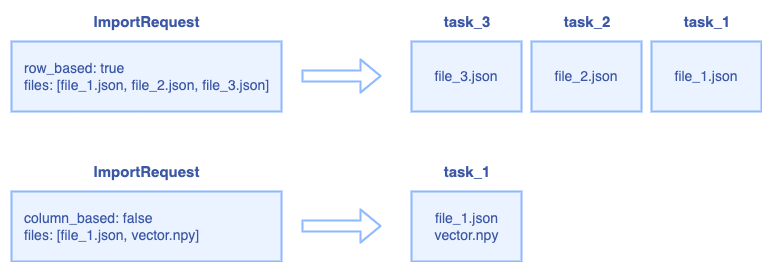

Note: for column-based import, multiple JSON files are not supported; all columns should be stored in one JSON file. If the user stores a vector field in a numpy file, the other scalar fields should be stored in one JSON file.
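
The file-list rule in the note above could be checked before a request is submitted. This is a minimal sketch: the function name and both error messages are hypothetical (the document does not define messages for these cases), and file types are inferred from the extension only.

```python
import os


def check_column_based_files(files):
    """Return an error message, or None if the file list looks acceptable."""
    json_files = []
    for name in files:
        ext = os.path.splitext(name)[1].lower()
        if ext == ".json":
            json_files.append(name)
        elif ext != ".npy":
            # Hypothetical message: only json and numpy files are expected.
            return f"Unsupported file type: {name}"
    if len(json_files) > 1:
        # Hypothetical message for the one-json-file rule.
        return "Column-based import accepts at most one json file"
    return None
```

For example, `check_column_based_files(["file_1.json", "vector.npy"])` passes, while a list with two JSON files is rejected.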

Error handling

The Import():

- Return error "Collection doesn't exist" if the target collection doesn't exist

- Return error "Partition doesn't exist" if the target partition doesn't exist

- Return error "Bucket doesn't exist" if the target bucket doesn't exist

- Return error "File list is empty" if the files list is empty

- The ImportTask pending list has a size limit. If a new import request exceeds the limit, return error "Import task queue max size is xxx, currently there are xx pending tasks. Not able to execute this request with x tasks."
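
The checks listed above can be sketched as a single validation function. The function name, its parameters, and the order of the checks are assumptions made for illustration; the error strings are the ones listed above. How many tasks a request creates is passed in as `new_tasks` rather than derived here.

```python
from typing import Optional, Sequence, Set


def validate_import_request(
    collection: str,
    partition: str,
    bucket: str,
    files: Sequence[str],
    new_tasks: int,
    *,
    known_collections: Set[str],
    known_partitions: Set[str],
    known_buckets: Set[str],
    pending_tasks: int,
    max_pending: int,
) -> Optional[str]:
    """Return the first applicable error message, or None if the request passes."""
    if collection not in known_collections:
        return "Collection doesn't exist"
    if partition not in known_partitions:
        return "Partition doesn't exist"
    if bucket not in known_buckets:
        return "Bucket doesn't exist"
    if not files:
        return "File list is empty"
    # Assumption: reject when the request would push the queue past the limit.
    if pending_tasks + new_tasks > max_pending:
        return (
            f"Import task queue max size is {max_pending}, currently there are "
            f"{pending_tasks} pending tasks. Not able to execute this request "
            f"with {new_tasks} tasks."
        )
    return None
```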

The get_import_state():

- Return error "File xxx doesn't exist" if the file cannot be opened.

- All fields must be present; otherwise, return the error "The field xxx is not provided"

- For row-based JSON files, return "not a valid row-based json format, the key rows not found" if the "rows" node cannot be found

- For column-based files, if a vector field is duplicated in the numpy file and the JSON file, return the error "The field xxx is duplicated"
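
A minimal sketch of the field checks above, assuming the file content has already been read into a string of well-formed JSON. The function names are hypothetical, and checking every row for every schema field is one possible reading of "all fields must be present".

```python
import json


def check_row_based(text, field_names):
    """Validate row-based JSON text against the expected field names."""
    data = json.loads(text)  # assumes well-formed JSON; raises otherwise
    if not isinstance(data, dict) or "rows" not in data:
        return "not a valid row-based json format, the key rows not found"
    for row in data["rows"]:
        for name in field_names:
            if name not in row:
                return f"The field {name} is not provided"
    return None


def check_duplicate_fields(json_fields, numpy_fields):
    """Report a field that appears in both the JSON file and a numpy file."""
    for name in sorted(set(json_fields) & set(numpy_fields)):
        return f"The field {name} is duplicated"
    return None
```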

- Return error "json parse error: xxxxx" if illegal JSON is encountered

- The row count of each field must be equal; otherwise, return the error "Inconsistent row count between field xxx and xxx". (All segments generated by this file will be abandoned.)

- If a vector's dimension doesn't match the field schema, return the error "Incorrect vector dimension for field xxx". (All segments generated by this file will be abandoned.)

- If a data file's size exceeds 1GB, return error "Data file size must be less than 1GB"

- If an import task does not respond for more than 6 hours, it will be marked as failed

- If a datanode crashes or restarts, the import tasks on it will be marked as failed
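
The data consistency checks above can be sketched as follows for column-based data already loaded into memory. The function names and the in-memory layout (a mapping from field name to a list of values) are hypothetical, and interpreting 1GB as 1024^3 bytes is an assumption.

```python
MAX_FILE_SIZE = 1024 ** 3  # assumption: 1GB means 2**30 bytes


def check_file_size(size_bytes):
    """Reject data files larger than the size limit."""
    if size_bytes > MAX_FILE_SIZE:
        return "Data file size must be less than 1GB"
    return None


def check_columns(columns, vector_dims):
    """Check row counts and vector dimensions.

    columns: field name -> list of values (one entry per row).
    vector_dims: vector field name -> dimension from the field schema.
    """
    names = list(columns)
    if not names:
        return None
    first = names[0]
    for name in names[1:]:
        if len(columns[name]) != len(columns[first]):
            return f"Inconsistent row count between field {first} and {name}"
    for name, dim in vector_dims.items():
        for vec in columns.get(name, []):
            if len(vec) != dim:
                return f"Incorrect vector dimension for field {name}"
    return None
```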

...

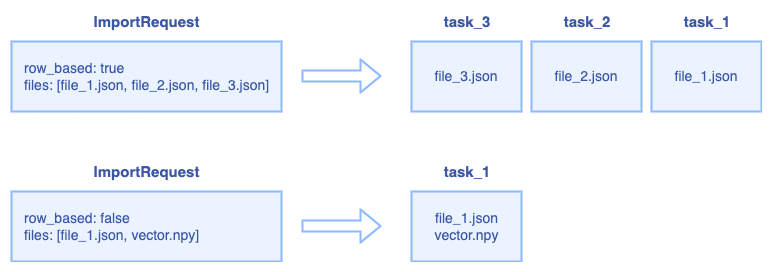

For a column-based request, all files are regarded as one ImportTask.

5. Datanode interfaces

...