Current state: ["Approved"]

ISSUE: #17599

PRs:

Keywords: search, range search, radius, range filter

Released:

Summary(required)

Currently, "search" in Milvus returns the TOPK most similar results, sorted by distance, for each query vector.

This MEP is about realizing a functionality named "range search". The user specifies a range scope, consisting of a radius and an optional range filter. Milvus then does "range search" and returns the TOPK sorted results with distances falling in this range scope.

This function can be regarded as a "superset" of the existing query function: if we sort the results of range search and take the first `topk` results, we get results identical to those of the current query function.

The result output of this MEP is different from the original query result. The original query result has a fixed length of `nq * topk`, while the result of range search has a variable length. In addition to `IDs` / `distances`, `lims` is also returned to record the offset of each query vector's results in the result set. Another MEP, pagination, will uniformly process the results of `Query` and `QueryByRange` and return them to the client, so the processing of the returned results is out of the scope of this MEP.

...

- param "radius" MUST be set; param "range_filter" is optional

- "falling in the range scope" means:

| Metric type | Behavior |
|---|---|
| IP | radius < distance <= range_filter |
| L2 and other metric types | range_filter <= distance < radius |
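The range scope rules above can be written as a small predicate. This is a sketch for illustration only: `InRangeScope` is a hypothetical helper, not a Milvus or Knowhere API. It assumes that a missing range_filter means only the radius bound applies.

```cpp
#include <string>

// Hypothetical helper (not a Milvus/Knowhere API): true when `distance`
// falls in the range scope defined by `radius` and optional `range_filter`.
//   IP:                    radius < distance <= range_filter
//   L2 and other metrics:  range_filter <= distance < radius
bool InRangeScope(const std::string& metric, float distance, float radius,
                  bool has_range_filter, float range_filter) {
    if (metric == "IP") {
        // Larger IP means more similar: keep results strictly above radius.
        return distance > radius && (!has_range_filter || distance <= range_filter);
    }
    // Smaller distance means more similar for L2 and the other metrics.
    return distance < radius && (!has_range_filter || distance >= range_filter);
}
```

With the example parameters used in this MEP (L2, "range_filter": 1.0, "radius": 2.0), `InRangeScope("L2", d, 2.0f, true, 1.0f)` accepts exactly 1.0 <= d < 2.0.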

Motivation(required)

Many users request the "range search" functionality. With this capability, users can:

- get results with distances falling in a range scope

- get more than 16384 search results

Public Interfaces(optional)

- No interface change in Milvus and all SDKs

We reuse the SDK interface `Search()` to realize range search, so the interfaces of Milvus and all SDKs do not need to change. We only need to add 2 more parameters, "radius" and "range_filter", into params.

When param "radius" is specified, Milvus does range search; otherwise, Milvus does search.

As shown in the following example, set "range_filter": 1.0 and "radius": 2.0 in search_params["params"].

```python
# Assumes `collection`, `vectors`, `nq` and `limit` are already defined.
default_index = {"index_type": "HNSW", "params": {"M": 48, "efConstruction": 500}, "metric_type": "L2"}
collection.create_index("float_vector", default_index)
collection.load()

# "radius" switches search to range search; with L2 this keeps 1.0 <= distance < 2.0
search_params = {"metric_type": "L2", "params": {"ef": 32, "range_filter": 1.0, "radius": 2.0}}
res = collection.search(vectors[:nq], "float_vector", search_params, limit, "int64 >= 0")
```

- Need to add 3 new interfaces in Knowhere

```cpp
// range search parameter validity check
virtual bool
CheckRangeSearch(Config& cfg, const IndexType type, const IndexMode mode);

// range search
virtual DatasetPtr
QueryByRange(const DatasetPtr& dataset, const Config& config, const faiss::BitsetView bitset);
```

One proposal is not to add the new interface QueryByRange(), but to reuse the interface Query() for range search, in order to minimize Knowhere's public interface.

Considering the implementation in Knowhere, we prefer not to adopt this proposal, because not all types of index support range search.

Currently, Knowhere supports 13 index types in total, but only 8 of them support range search (the cells filled in BLUE):

...

If we add the new interface QueryByRange(), we can add a virtual QueryByRange() to "VecIndex.h". If QueryByRange() is called on an index type that does not support range search, a Knowhere exception is thrown.
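Here is a minimal sketch of this design, using simplified stand-ins for Knowhere's types. `VecIndex` declares `QueryByRange()` with a default that throws, and only index types that support range search override it. `Dataset`, `Config`, `KnowhereException`, `IvfFlatIndex` and `UnsupportedIndex` are hypothetical stand-ins, and the `faiss::BitsetView` parameter is omitted.

```cpp
#include <memory>
#include <stdexcept>
#include <string>

// Simplified, hypothetical stand-ins for Knowhere types.
struct Dataset {};
using DatasetPtr = std::shared_ptr<Dataset>;
struct Config {
    float radius = 0.0f;
};

class KnowhereException : public std::runtime_error {
 public:
    using std::runtime_error::runtime_error;
};

class VecIndex {
 public:
    virtual ~VecIndex() = default;
    virtual std::string Type() const = 0;

    // Default for index types WITHOUT range search support: throw.
    virtual DatasetPtr QueryByRange(const DatasetPtr& dataset, const Config& config) {
        (void)dataset;
        (void)config;
        throw KnowhereException("range search is not supported by index type " + Type());
    }
};

// An index type that supports range search overrides the default.
class IvfFlatIndex : public VecIndex {
 public:
    std::string Type() const override { return "IVF_FLAT"; }
    DatasetPtr QueryByRange(const DatasetPtr& dataset, const Config& config) override {
        (void)dataset;
        (void)config;
        // A real implementation would run the range search here.
        return std::make_shared<Dataset>();
    }
};

// An index type without range search keeps the throwing default.
class UnsupportedIndex : public VecIndex {
 public:
    std::string Type() const override { return "UNSUPPORTED_INDEX"; }
};
```

Putting the throwing default in the base class means callers need no per-index-type checks. Unsupported index types fail loudly at the call site.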

...

...

```cpp
// brute force range search
static DatasetPtr
BruteForce::RangeSearch(const DatasetPtr base_dataset, const DatasetPtr query_dataset, const Config& config, const faiss::BitsetView bitset);
```

Design Details(required)

Knowhere

Index types and metric types supporting range search are listed below:

| Index type | IP | L2 | HAMMING | JACCARD | TANIMOTO | SUBSTRUCTURE | SUPERSTRUCTURE |
|---|---|---|---|---|---|---|---|
| BIN_IDMAP | | | | | | | |
| BIN_IVF_FLAT | | | | | | | |
| IDMAP | | | | | | | |
| IVF_FLAT | | | | | | | |
| IVF_PQ | | | | | | | |
| IVF_SQ8 | | | | | | | |
| HNSW | | | | | | | |
| ANNOY | | | | | | | |
| DISKANN | | | | | | | |

If the range search API is called with an unsupported index type or metric type, an exception is thrown.

In Knowhere, two new parameters, "radius" and "range_filter", are passed in via config. Range search returns all unsorted results with distances falling in the range scope:

| Metric type | Behavior |
|---|---|
| IP | radius < distance <= range_filter |
| L2 or other metric types | range_filter <= distance < radius |

Knowhere runs range search in 2 steps:

- pass param "radius" to the third-party library's range search API and get the result

- if param "range_filter" is set, filter the above result and return it; otherwise, return the above result directly
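The second step can be sketched as a per-query filter that keeps the IDS / DISTANCE / LIMS layout consistent. `RangeResult` and `ApplyRangeFilter` are hypothetical names. The input is assumed to be the unsorted result already returned by the third-party range search for "radius".

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical container for a range search result in IDS / DISTANCE / LIMS layout.
struct RangeResult {
    std::vector<int64_t> ids;
    std::vector<float> distances;
    std::vector<size_t> lims;  // length nq + 1 (non-empty), prefix sums of per-query counts
};

// Step 2: keep only the results that also satisfy range_filter,
// rebuilding LIMS so each query's slice stays contiguous.
RangeResult ApplyRangeFilter(const RangeResult& in, const std::string& metric, float range_filter) {
    RangeResult out;
    const size_t nq = in.lims.size() - 1;
    out.lims.reserve(nq + 1);
    out.lims.push_back(0);
    for (size_t q = 0; q < nq; ++q) {
        for (size_t j = in.lims[q]; j < in.lims[q + 1]; ++j) {
            const float d = in.distances[j];
            // IP: distance <= range_filter; L2 and others: distance >= range_filter.
            const bool keep = (metric == "IP") ? (d <= range_filter) : (d >= range_filter);
            if (keep) {
                out.ids.push_back(in.ids[j]);
                out.distances.push_back(d);
            }
        }
        out.lims.push_back(out.ids.size());  // close query q's slice
    }
    return out;
}
```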

We add 3 new APIs CheckRangeSearch(), QueryByRange() and BruteForce::RangeSearch() to support range search.

1. CheckRangeSearch()

This API performs the range search parameter validity check. It throws an exception when the check fails.

The valid scope for "radius" is defined as: -1.0 <= radius <= float_max

| Metric type | Distance range | Most similar | Least similar |
|---|---|---|---|
| L2 | [0, inf) | 0 | inf |
| IP | [-1, 1] | 1 | -1 |
| jaccard | [0, 1] | 0 | 1 |
| tanimoto | [0, inf) | 0 | inf |
| hamming | [0, n] | 0 | n |
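Below is a sketch of the kind of check CheckRangeSearch() performs. It takes plain arguments instead of Knowhere's Config / IndexType / IndexMode. The radius bound comes from this MEP. Requiring a non-empty scope when range_filter is set is an assumption derived from the behavior table, and `CheckRangeParams` is a hypothetical name.

```cpp
#include <cfloat>
#include <optional>
#include <stdexcept>
#include <string>

// Hypothetical validity check in the spirit of CheckRangeSearch().
void CheckRangeParams(const std::string& metric, float radius, std::optional<float> range_filter) {
    // Valid scope for radius stated in this MEP: -1.0 <= radius <= float_max (also rejects NaN).
    if (!(radius >= -1.0f && radius <= FLT_MAX)) {
        throw std::invalid_argument("radius must satisfy -1.0 <= radius <= float_max");
    }
    if (range_filter.has_value()) {
        const float rf = *range_filter;
        // Assumption: the scope must be non-empty.
        // IP needs radius < range_filter; other metrics need range_filter < radius.
        const bool non_empty = (metric == "IP") ? (radius < rf) : (rf < radius);
        if (!non_empty) {
            throw std::invalid_argument("radius and range_filter define an empty range scope");
        }
    }
}
```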

2. QueryByRange()

This API returns all unsorted results with distance falling in the specified range scope.

| PROTO | virtual DatasetPtr |

...

But if we reuse the interface Query() for range search, the implementation of Query() for all index types would have to change like this:

...


...

INPUT | Dataset { Config { knowhere::meta::RADIUS: - knowhere::meta::RANGE_FILTER: - } |

OUTPUT | Dataset { |

LIMS has length "nq+1" and holds the offset prefix sums for the result IDS and DISTANCE. IDS and DISTANCE have the same length, which is variable.

Suppose the nq query vectors are labeled {0, 1, 2, ..., nq-1}.

The result counts of the query vectors are: {r(0), r(1), r(2), ..., r(nq-1)}

Then LIMS contains: {0, r(0), r(0)+r(1), r(0)+r(1)+r(2), ..., r(0)+r(1)+r(2)+...+r(nq-1)}

The total number of range search results is LIMS[nq].

The range search result for query vector i is IDS[LIMS[i], LIMS[i+1]) and DISTANCE[LIMS[i], LIMS[i+1]).

The memory used for IDS, DISTANCE and LIMS is allocated in Knowhere and is automatically freed when the Dataset is destructed.
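Building LIMS and slicing one query's results takes only a prefix sum. `BuildLims` and `QuerySlice` are hypothetical helpers that sketch the layout described above.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// LIMS = {0, r(0), r(0)+r(1), ..., r(0)+...+r(nq-1)}, length nq + 1.
std::vector<size_t> BuildLims(const std::vector<size_t>& counts) {
    std::vector<size_t> lims(counts.size() + 1, 0);
    for (size_t i = 0; i < counts.size(); ++i) {
        lims[i + 1] = lims[i] + counts[i];
    }
    return lims;
}

// Half-open slice [LIMS[i], LIMS[i+1]) of IDS / DISTANCE owned by query vector i.
std::pair<size_t, size_t> QuerySlice(const std::vector<size_t>& lims, size_t i) {
    return {lims[i], lims[i + 1]};
}
```

For counts {2, 0, 3}, LIMS is {0, 2, 2, 5}. Query 1 owns the empty slice [2, 2), query 2 owns [2, 5), and the total result count is LIMS[3] = 5.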

3. BruteForce::RangeSearch()

This API does range search on a dataset without an index. It returns all unsorted results with distance "better than radius" (for IP: > radius; for others: < radius).

| PROTO | static DatasetPtr ... const ... Config& config, ... const faiss::BitsetView ... bitset); |

INPUT | Dataset { Dataset { Config { knowhere::meta::RADIUS: - knowhere::meta::RANGE_FILTER: - } |

OUTPUT | Dataset { |

The output is the same as that of QueryByRange().
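Below is a brute-force range search sketch for the L2 metric over raw vectors. It produces the same unsorted IDS / DISTANCE / LIMS layout. Three assumptions apply: distances are squared L2 (as faiss reports them); a `std::vector<bool>` stands in for faiss::BitsetView, with `true` meaning "filtered out"; and `BruteForceRangeSearchL2` is a hypothetical name, not the real BruteForce::RangeSearch().

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct BruteForceRangeResult {
    std::vector<int64_t> ids;
    std::vector<float> distances;
    std::vector<size_t> lims;  // nq + 1 prefix sums
};

// Keeps every base vector whose squared L2 distance to the query is < radius.
BruteForceRangeResult BruteForceRangeSearchL2(const std::vector<float>& base, size_t nb,
                                              const std::vector<float>& queries, size_t nq,
                                              size_t dim, float radius,
                                              const std::vector<bool>& filtered) {
    BruteForceRangeResult res;
    res.lims.push_back(0);
    for (size_t q = 0; q < nq; ++q) {
        const float* qv = queries.data() + q * dim;
        for (size_t b = 0; b < nb; ++b) {
            if (filtered[b]) continue;  // role played by the bitset in Knowhere
            const float* bv = base.data() + b * dim;
            float dist = 0.0f;
            for (size_t k = 0; k < dim; ++k) {
                const float diff = qv[k] - bv[k];
                dist += diff * diff;
            }
            if (dist < radius) {
                res.ids.push_back(static_cast<int64_t>(b));
                res.distances.push_back(dist);
            }
        }
        res.lims.push_back(res.ids.size());  // close query q's slice
    }
    return res;
}
```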

Segcore

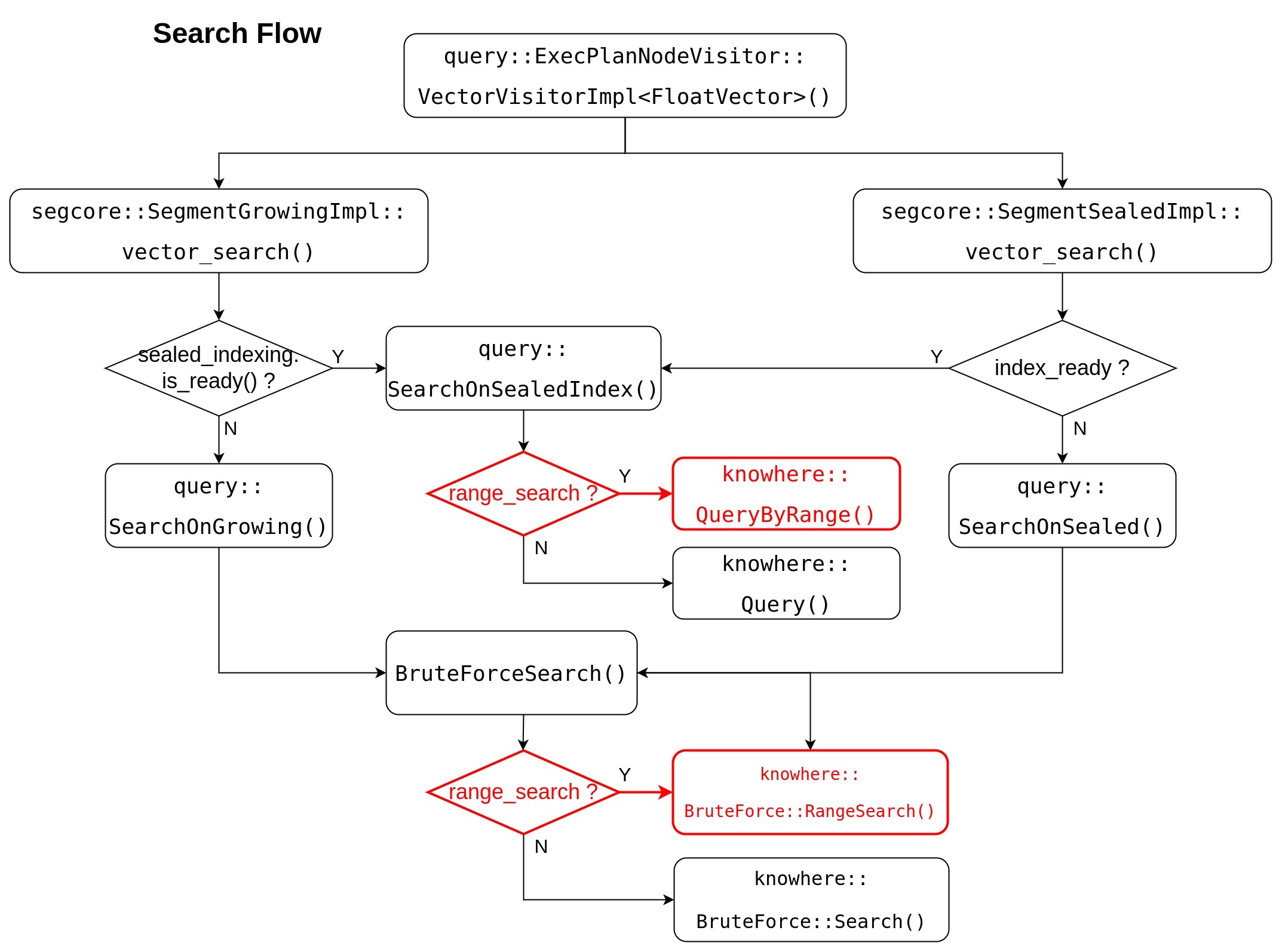

The Segcore search flow will be updated as shown in this flow chart; range-search-related changes are marked in RED.

Segcore uses the existence of the "radius" parameter to decide whether to run search or range search:

- when param "radius" is set, range search is called;

- otherwise, search is called.

The APIs query::SearchOnSealedIndex() and BruteForceSearch() do the following:

- pass the range parameters (radius / range_filter) to Knowhere

- get all unsorted range search results from Knowhere

- for each query's results, do a heap sort to keep the TOPK

- return a result Dataset with TOPK results

Whether range search or search is run, the output structures are the same:

- query::SearchOnSealedIndex() => SearchResult

- BruteForceSearch() => SubSearchResult

Both SearchResult and SubSearchResult contain TOPK sorted result for each NQ.

- seg_offsets_: has length "nq * topk"; -1 is filled in when there are not enough results

- distances_: has length "nq * topk"; the data is undefined when there are not enough results
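The segcore post-processing step (heap-select the TOPK per query, pad with -1) can be sketched as follows. `SelectTopkFromRange` and `TopkResult` are hypothetical names. Padded distances are set to 0.0f only as a placeholder, since this MEP leaves them undefined.

```cpp
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

struct TopkResult {
    std::vector<int64_t> seg_offsets;  // nq * topk, -1 where there are not enough results
    std::vector<float> distances;      // nq * topk, placeholder 0.0f where unused
};

TopkResult SelectTopkFromRange(const std::vector<int64_t>& ids, const std::vector<float>& dists,
                               const std::vector<size_t>& lims, size_t topk, bool larger_is_better) {
    const size_t nq = lims.size() - 1;
    TopkResult out{std::vector<int64_t>(nq * topk, -1), std::vector<float>(nq * topk, 0.0f)};
    if (topk == 0) return out;
    // better(a, b): distance a is more similar than b (IP: larger, L2: smaller).
    auto better = [larger_is_better](float a, float b) { return larger_is_better ? a > b : a < b; };
    using Item = std::pair<float, int64_t>;
    // With this comparator the heap top is the WORST of the kept candidates.
    auto cmp = [&better](const Item& x, const Item& y) { return better(x.first, y.first); };
    for (size_t q = 0; q < nq; ++q) {
        std::priority_queue<Item, std::vector<Item>, decltype(cmp)> heap(cmp);
        for (size_t j = lims[q]; j < lims[q + 1]; ++j) {
            if (heap.size() < topk) {
                heap.emplace(dists[j], ids[j]);
            } else if (better(dists[j], heap.top().first)) {
                heap.pop();  // evict the worst kept candidate
                heap.emplace(dists[j], ids[j]);
            }
        }
        // Pop worst-first into positions from the back, so slots end up sorted best-first.
        for (size_t pos = heap.size(); pos-- > 0;) {
            out.seg_offsets[q * topk + pos] = heap.top().second;
            out.distances[q * topk + pos] = heap.top().first;
            heap.pop();
        }
    }
    return out;
}
```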

Milvus

Range search completely reuses the call stack from SDK to segcore.

How to get more than 16384 range search results?

With this solution, users can get at most 16384 range search results in one call.

If users want to get more than 16384 range search results, they can call range search multiple times with different range_filter parameters (using L2 as an example):

- NQ = 1

1st call with (range_filter = 0.0, radius = inf), get result distances like this:

{d(0), d(1), d(2), ..., d(n-1)}

2nd call with (range_filter = d(n-1), radius = inf), get result distances like this:

{d(n), d(n+1), d(n+2), ..., d(2n-1)}

3rd call with (range_filter = d(2n-1), radius = inf), get result distances like this:

{d(2n), d(2n+1), d(2n+2), ..., d(3n-1)}

...

- NQ > 1 (suppose NQ = 2)

1st call with (range_filter = 0.0, radius = inf), get result distances like this:

{d(0,0), d(0,1), d(0,2), ..., d(0,n-1), d(1,0), d(1,1), d(1,2), ..., d(1,n-1)}

2nd call with (range_filter = min{d(0,n-1), d(1,n-1)}, radius = inf), get result distances like this:

{d(0,n), d(0,n+1), d(0,n+2), ..., d(0,2n-1), d(1,n), d(1,n+1), d(1,n+2), ..., d(1,2n-1)}

3rd call with (range_filter = min{d(0,2n-1), d(1,2n-1)}, radius = inf), get result distances like this:

{d(0,2n), d(0,2n+1), d(0,2n+2), ..., d(0,3n-1), d(1,2n), d(1,2n+1), d(1,2n+2), ..., d(1,3n-1)}

...

The results of each iteration overlap with those of the previous iteration: range_filter is an inclusive bound, so results at the boundary distance are returned again. Users need to detect and remove these duplicates.
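The NQ = 1 procedure can be simulated in memory to show why de-duplication is needed. `RangeSearchCall` is a hypothetical stand-in for one Milvus range search call (L2, sorted, capped at `limit`), not an SDK function. `CollectAll` is likewise hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <set>
#include <utility>
#include <vector>

// One call: up to `limit` hits with range_filter <= distance < radius, ascending.
// The index into all_dists acts as the result ID.
std::vector<std::pair<float, int64_t>> RangeSearchCall(const std::vector<float>& all_dists,
                                                       float range_filter, float radius,
                                                       size_t limit) {
    std::vector<std::pair<float, int64_t>> hits;
    for (size_t i = 0; i < all_dists.size(); ++i) {
        if (all_dists[i] >= range_filter && all_dists[i] < radius) {
            hits.emplace_back(all_dists[i], static_cast<int64_t>(i));
        }
    }
    std::sort(hits.begin(), hits.end());
    if (hits.size() > limit) hits.resize(limit);
    return hits;
}

// Page through all results by moving range_filter to the last distance returned;
// boundary hits come back again and are de-duplicated by ID.
std::vector<int64_t> CollectAll(const std::vector<float>& all_dists, size_t limit) {
    const float radius = std::numeric_limits<float>::infinity();
    float range_filter = 0.0f;
    std::set<int64_t> seen;
    std::vector<int64_t> collected;
    while (true) {
        const auto page = RangeSearchCall(all_dists, range_filter, radius, limit);
        size_t fresh = 0;
        for (const auto& hit : page) {
            if (seen.insert(hit.second).second) {
                collected.push_back(hit.second);
                ++fresh;
            }
        }
        // Stop on a short page (nothing left) or when a call adds nothing new.
        if (page.size() < limit || fresh == 0) break;
        range_filter = page.back().first;
    }
    return collected;
}
```

Moving range_filter cannot make progress if more than `limit` results share exactly the same distance. In that case the sketch stops (`fresh == 0`) rather than looping forever, so those results are only partially returned.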

Compatibility, Deprecation, and Migration Plan(optional)

This is new functionality, so there is no compatibility issue.

Test Plan(required)

Knowhere

- Add new unittest

- Add benchmark to test range search runtime and recall

- Add benchmark to test range search QPS

There is no public dataset for range search, so I have created range search datasets based on sift1M and glove200.

This is not a good code design for Knowhere.

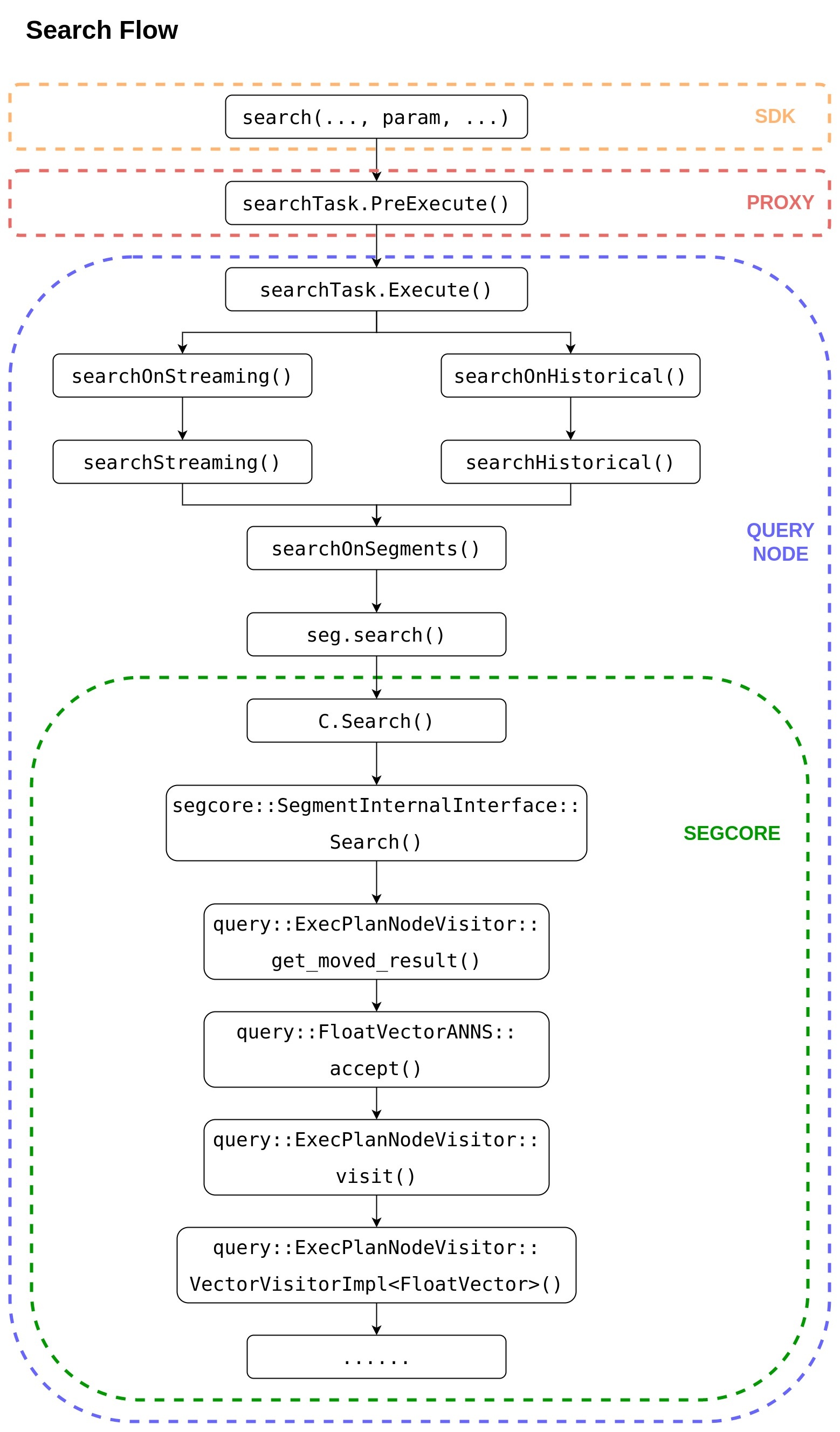

Design Details(required)

The following figure shows the complete call stack of a search request from SDK to segcore. Range search completely reuses this call stack without any changes. Range search only needs to put the parameter "radius" into the query parameter "param" on the SDK side.

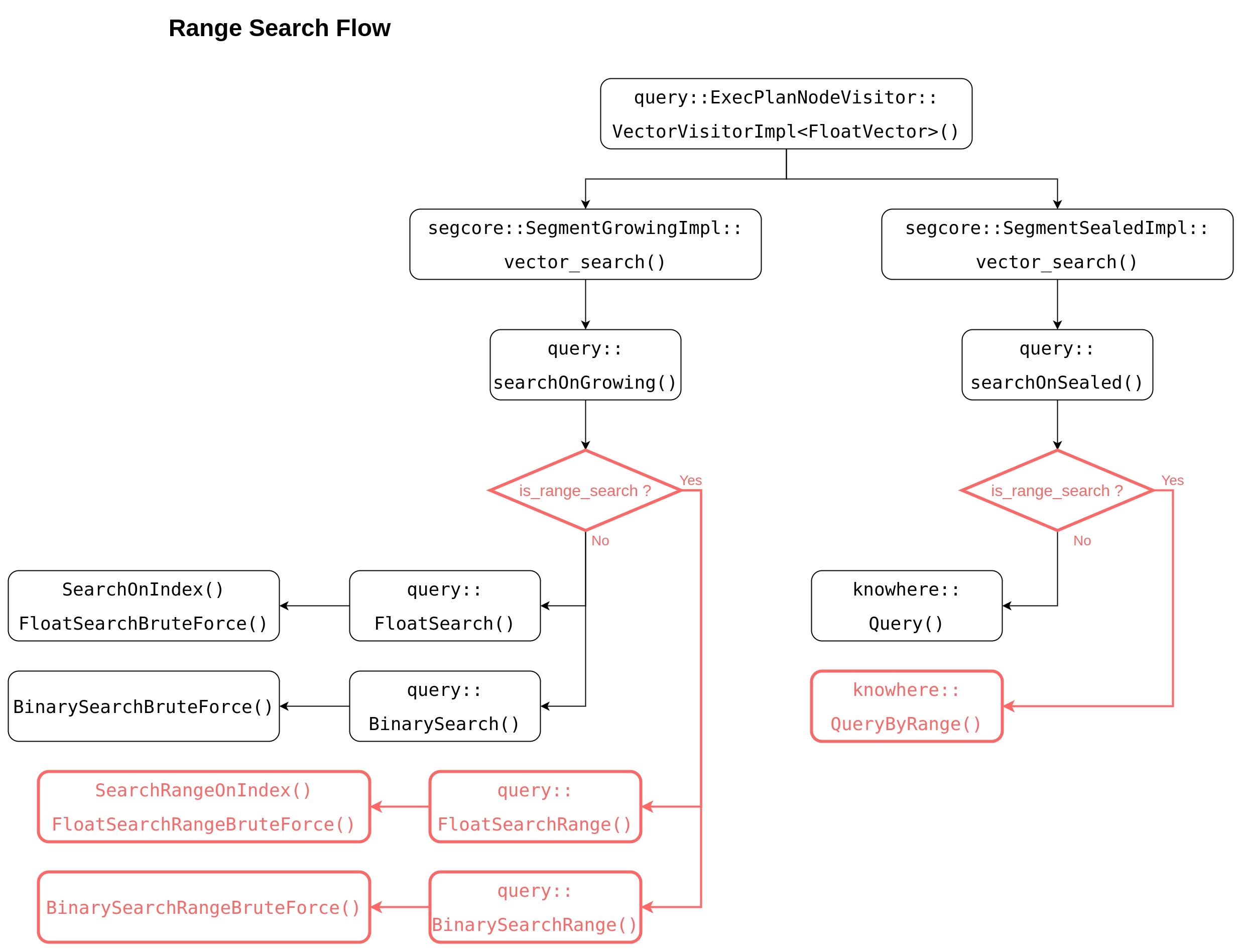

The following figure shows the call stack diagram of vector query in segcore, and the BLACK part shows the functions that have been implemented.

For sealed segments, Knowhere needs to provide the interface QueryByRange() to realize range search.

For growing segments, no index is created, so Knowhere IDMAP cannot be used for brute-force search, and the full brute-force search logic has to be reimplemented from scratch. To implement range search, the functions shown in the RED part need to be implemented.

Another solution is for Knowhere to provide a new IDMAP index that does not need vector data to be inserted. Instead, it only needs the external memory address of the vector data. A growing segment can temporarily create this kind of index during a query, call the Query() & QueryByRange() interfaces provided by the IDMAP, and destroy the index immediately after use.

Another MEP, MEP 34 (IDMAP/BinaryIDMAP Enhancement), describes this proposal.

Result Handling

Several data structures related to query results need to be enhanced to support range search.

SubSearchResult

It is used to store each chunk's query result in a segment.

We need to add a new field "lims_" and a new method "merge_range()", because merging behaves very differently for Query() and QueryByRange(). For Query(), merging two "nq * topk" results still yields "nq * topk" results. For QueryByRange(), merging A (with "n0" results) and B (with "n1" results) yields "n0 + n1" results.

```cpp
class SubSearchResult {
    ... ...

    void
    merge(const SubSearchResult& sub_result);

    void
    merge_range(const SubSearchResult& sub_result);  // <==== newly added

 private:
    ... ...

    std::vector<int64_t> seg_offsets_;
    std::vector<float> distances_;
    std::vector<size_t> lims_;  // <==== newly added
};
```
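merge_range() concatenates results per query rather than keeping only the best topk. The sketch below uses a hypothetical `RangeSubResult` struct that stands in for SubSearchResult (only the fields relevant here). It assumes both inputs cover the same nq queries.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct RangeSubResult {
    std::vector<int64_t> seg_offsets;
    std::vector<float> distances;
    std::vector<size_t> lims;  // nq + 1 prefix sums
};

// A with n0 results merged with B with n1 results -> n0 + n1 results, grouped per query.
RangeSubResult MergeRange(const RangeSubResult& a, const RangeSubResult& b) {
    const size_t nq = a.lims.size() - 1;
    RangeSubResult out;
    out.lims.push_back(0);
    for (size_t q = 0; q < nq; ++q) {
        for (size_t j = a.lims[q]; j < a.lims[q + 1]; ++j) {
            out.seg_offsets.push_back(a.seg_offsets[j]);
            out.distances.push_back(a.distances[j]);
        }
        for (size_t j = b.lims[q]; j < b.lims[q + 1]; ++j) {
            out.seg_offsets.push_back(b.seg_offsets[j]);
            out.distances.push_back(b.distances[j]);
        }
        out.lims.push_back(out.seg_offsets.size());  // close query q's slice
    }
    return out;
}
```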

SearchResult

It is used to store the final result of one segment search. We need to add a new field "lims_".

```cpp
struct SearchResult {
    ... ...

 public:
    ... ...

    std::vector<float> distances_;
    std::vector<int64_t> seg_offsets_;
    std::vector<size_t> lims_;  // <==== newly added

    ... ...
};
```

ReduceHelper

It's used to reduce all search results from all segments in a query node.

reduceSearchResultData in Proxy

It's used to reduce all search results from multiple query nodes.

We need to discuss the interface between Pagination and range search.


Test Plan(required)

We can reuse Query() test cases to test QueryByRange() with small changes.

The main difference between Query() and QueryByRange() is that Query() uses 'topk' while QueryByRange() uses 'radius'.

Do the following:

- do Query() first and get 'nq * topk' results

- for L2, find the max distance among the 'nq * topk' results, and let 'radius = (max distance)^(1/2)'

- for IP, find the min distance among the 'nq * topk' results, and let 'radius = min distance'

- for other binary metric types, find the max distance among the 'nq * topk' results, and let 'radius = max distance'

- do QueryByRange() with the above 'radius'; the result will be a superset of the result of Query()
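The procedure can be checked on a single query's distances. This sketch uses hypothetical helpers (`TopkIds`, `RangeIds`, `RadiusFromTopk`, `IsSuperset`) and works directly on the returned distance values. It therefore does not model the square root used for L2 above. The L2 range rule is distance < radius (strict), so a radius equal to the max distance would drop the boundary result. For that reason the sketch nudges radius up with std::nextafter.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <set>
#include <vector>

// Plain search stand-in (L2): indices of the topk smallest distances.
std::vector<size_t> TopkIds(const std::vector<float>& dists, size_t topk) {
    std::vector<size_t> order(dists.size());
    for (size_t i = 0; i < order.size(); ++i) order[i] = i;
    const size_t k = std::min(topk, order.size());
    std::partial_sort(order.begin(), order.begin() + k, order.end(),
                      [&](size_t x, size_t y) { return dists[x] < dists[y]; });
    order.resize(k);
    return order;
}

// Range search stand-in (L2): all indices with distance < radius, unsorted.
std::vector<size_t> RangeIds(const std::vector<float>& dists, float radius) {
    std::vector<size_t> ids;
    for (size_t i = 0; i < dists.size(); ++i)
        if (dists[i] < radius) ids.push_back(i);
    return ids;
}

// Smallest float strictly above the max topk distance.
float RadiusFromTopk(const std::vector<float>& dists, const std::vector<size_t>& topk_ids) {
    float max_dist = -INFINITY;
    for (size_t id : topk_ids) max_dist = std::max(max_dist, dists[id]);
    return std::nextafter(max_dist, INFINITY);
}

// True when every topk ID also appears in the range search result.
bool IsSuperset(const std::vector<size_t>& range_ids, const std::vector<size_t>& topk_ids) {
    const std::set<size_t> found(range_ids.begin(), range_ids.end());
    return std::all_of(topk_ids.begin(), topk_ids.end(),
                       [&](size_t id) { return found.count(id) > 0; });
}
```

Ties at the boundary distance make the range result strictly larger than topk, which is why the check is "superset" rather than "equal".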

For index HNSW, we should set "range_k" before calling QueryByRange().

...

.

You can find them in NAS:

- test/milvus/ann_hdf5/binary/sift-4096-hamming-range.hdf5
  - base dataset and query dataset are identical to sift1m
  - radius = 291.0
  - result length for each nq is different
  - total result num 1,063,078

- test/milvus/ann_hdf5/sift-128-euclidean-range.hdf5
  - base dataset and query dataset are identical to sift1m
  - radius = 186.0 * 186.0
  - result length for each nq is different
  - total result num 1,054,377

- test/milvus/ann_hdf5/sift-128-euclidean-range-multi.hdf5

  ...

  - base dataset and query dataset are identical to sift1m
  - ground truth IDs and Distances are identical to sift1m
  - each nq's radius is set to the last ground truth distance
  - result length for each nq is 100
  - total result num 1,000,000

- test/milvus/ann_hdf5/glove-200-angular-range.hdf5
  - base dataset and query dataset are identical to glove200
  - radius = 0.52
  - result length for each nq is different
  - total result num 1,060,888

Segcore

- Add new range search unittest

Milvus

- use the sift1M/glove200 datasets to test range search (radius = max_float for L2, -1.0 for IP); we expect to get results identical to those of search

Rejected Alternatives(optional)

The previous proposal of this MEP was to let range search return all results with distances better than a "radius".

Implementing the previous proposal is much more complicated than implementing the current proposal.

Pros:

- Get all range search result in one call

Cons:

- Need to implement Merge operations for chunk, segment, query node and proxy

- Memory explosion caused by Merge

- Many API modifications caused by the invalid topk parameter

Others

Because the length of Knowhere's range search result is variable, Knowhere plans to provide another API that returns the range search result count for each NQ.

If users request getting all range search results in one call, the query node team will provide another solution that saves Knowhere's range search output directly to S3.

References(optional)

...