...

- Open Business and Artificial Intelligence Connectivity (OBAIC) borrows its concept from Open Database Connectivity (ODBC), an interface that makes it possible for applications to access data from a variety of database management systems (DBMSs). The aim of OBAIC is to define an interface that allows BI tools to access machine learning models from a variety of AI platforms - “AI ODBC for BI”.

- Through OBAIC, BI vendors can connect to any AI platform without being concerned about the underlying implementation, such as how the AI platform trains the model or infers the result. This is just like what ODBC has long provided for databases: the caller doesn't need to be concerned with how the database stores the data and executes the query.

- The committee has decided that this standard will only define the REST API protocol for how AI and BI communicate. The design and actual implementation of OBAIC, such as whether it should be server-based, serverless, or Docker-based, are left to vendors to provide, or to a separate open-source project should this protocol grow into one that provides such an implementation.

- There are three key aspects to consider when designing this standard:

  - BI - What specific calls do I need this standard to provide so that I can better leverage any underlying AI/ML platform?

  - AI - What is the common denominator that an AI platform should provide to support this standard?

  - Data - Should data be moved around in the communication between AI and BI (passed by value), or be kept in the same location (passed by reference)?

All of the REST API calls presented below use bearer tokens for authorization. The {prefix} of each API is configurable on the hosting server. This protocol is inspired by Delta Sharing.
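Each call therefore combines a URL rooted at the server's configurable `{prefix}` with an `Authorization: Bearer {token}` header. Below is a minimal sketch in Python, using only the standard library, of how a BI-side client might assemble such a request. The helper name `build_obaic_request` and the example prefix URL are hypothetical and not defined by the protocol.

```python
from typing import Optional
from urllib.request import Request


def build_obaic_request(prefix: str, path: str, token: str,
                        method: str = "GET",
                        body: Optional[bytes] = None) -> Request:
    """Build (but do not send) an OBAIC REST request.

    Hypothetical helper: `prefix` is whatever the hosting server is
    configured with, and `token` is the OBAIC bearer token.
    """
    # Join the configurable prefix and the API path without doubling "/".
    url = prefix.rstrip("/") + "/" + path.lstrip("/")
    headers = {"Authorization": f"Bearer {token}"}
    if body is not None:
        # Assumes JSON request bodies, as in the examples in this document.
        headers["Content-Type"] = "application/json; charset=utf-8"
    return Request(url, data=body, headers=headers, method=method)


if __name__ == "__main__":
    # Hypothetical prefix; each server configures its own.
    req = build_obaic_request("https://ai.example.com/obaic/", "/models", "my-token")
    print(req.get_method(), req.full_url)
```

Passing the result to `urllib.request.urlopen` would send it. Keeping construction separate from sending means the URL and header logic can be tested without a live server.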

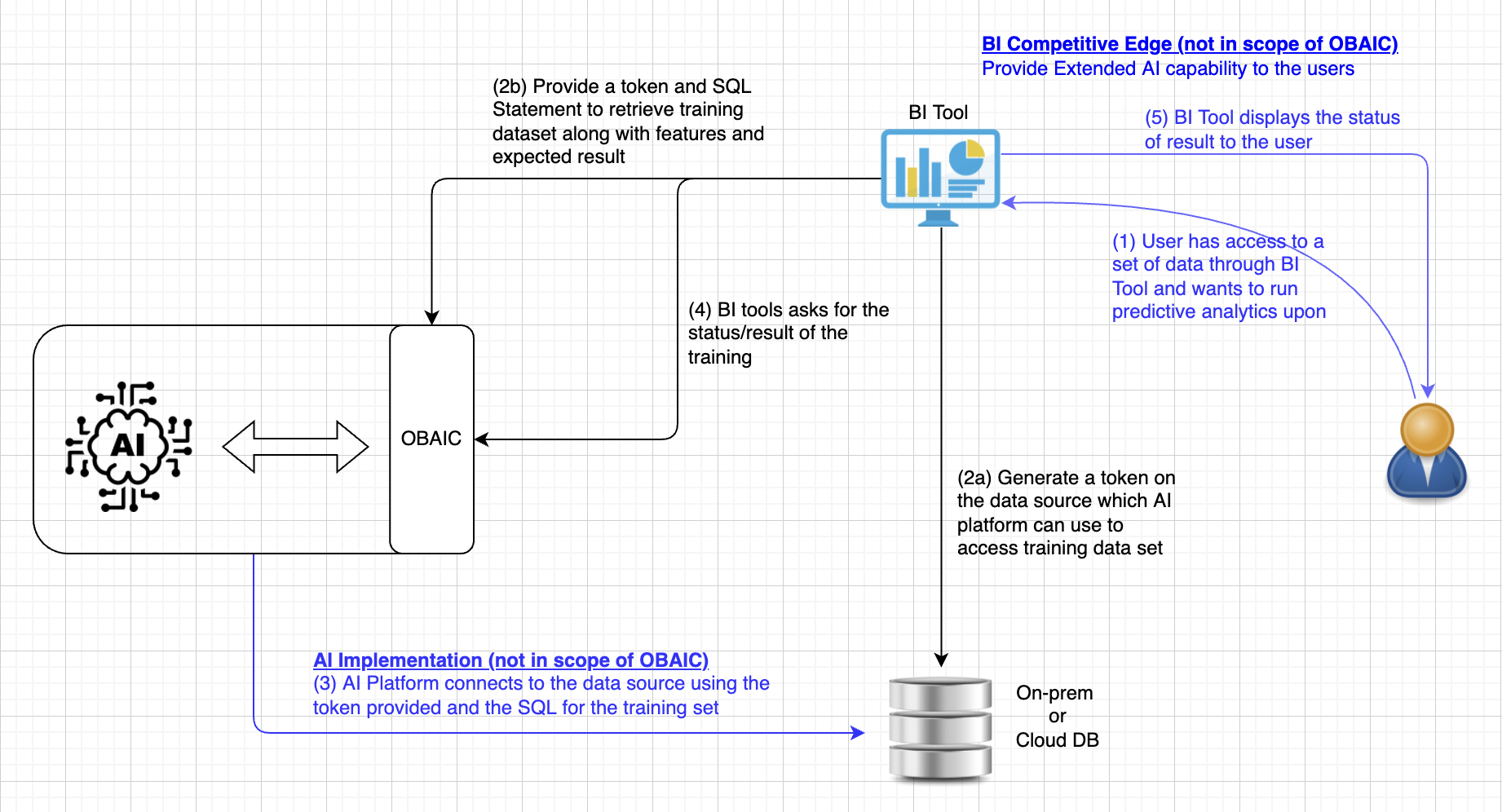

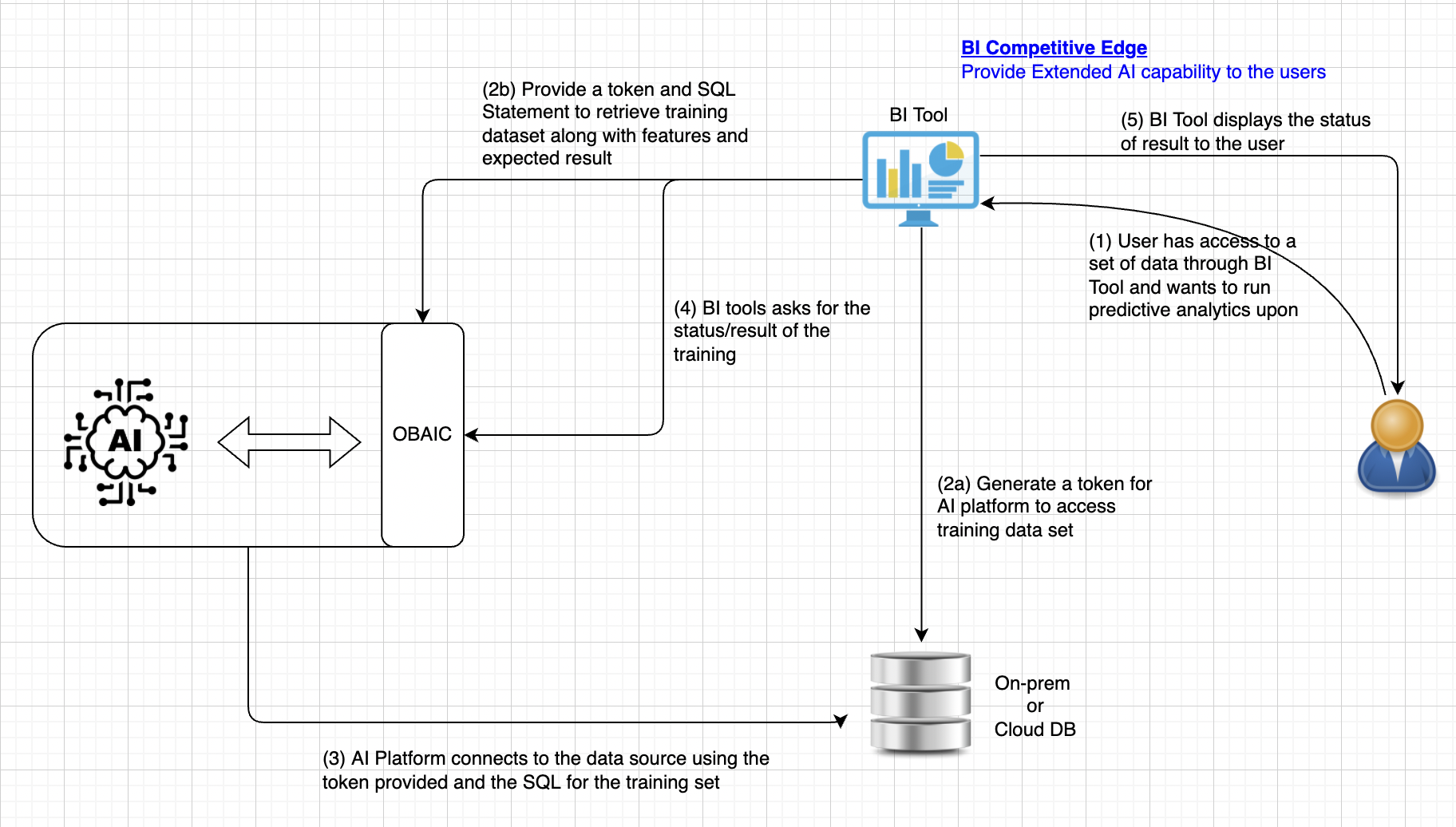

High Level Protocol - Training

- Not in the scope of OBAIC - The user analyzes data using BI tools and finds that predictive analytics on the data set would be valuable. This is the traditional step when a user interacts with BI.

- The BI tool, on behalf of the user, requests the AI platform through OBAIC to train/prepare a model that accepts features of a certain type (numeric, categorical, text, etc.), providing a token (a token with permissions associated with the user requesting this) to allow access to the data.

  - (a) Obtain a token with permissions associated with the user making the request. This token is passed to the AI platform, allowing it to access the training data with a SQL statement run against the datastore.

- BI tool polls for the status or retrieve the training result. If the training is still in progress, the status will be returned. When training is completed, results and performance of the model will be returned.

High Level Protocol - Inference

REST APIs

All of the REST APIs call presented below use bearer tokens for authorization. The {prefix} of each API is configurable in the hosted servers. This protocol is inspired by Delta Sharing.

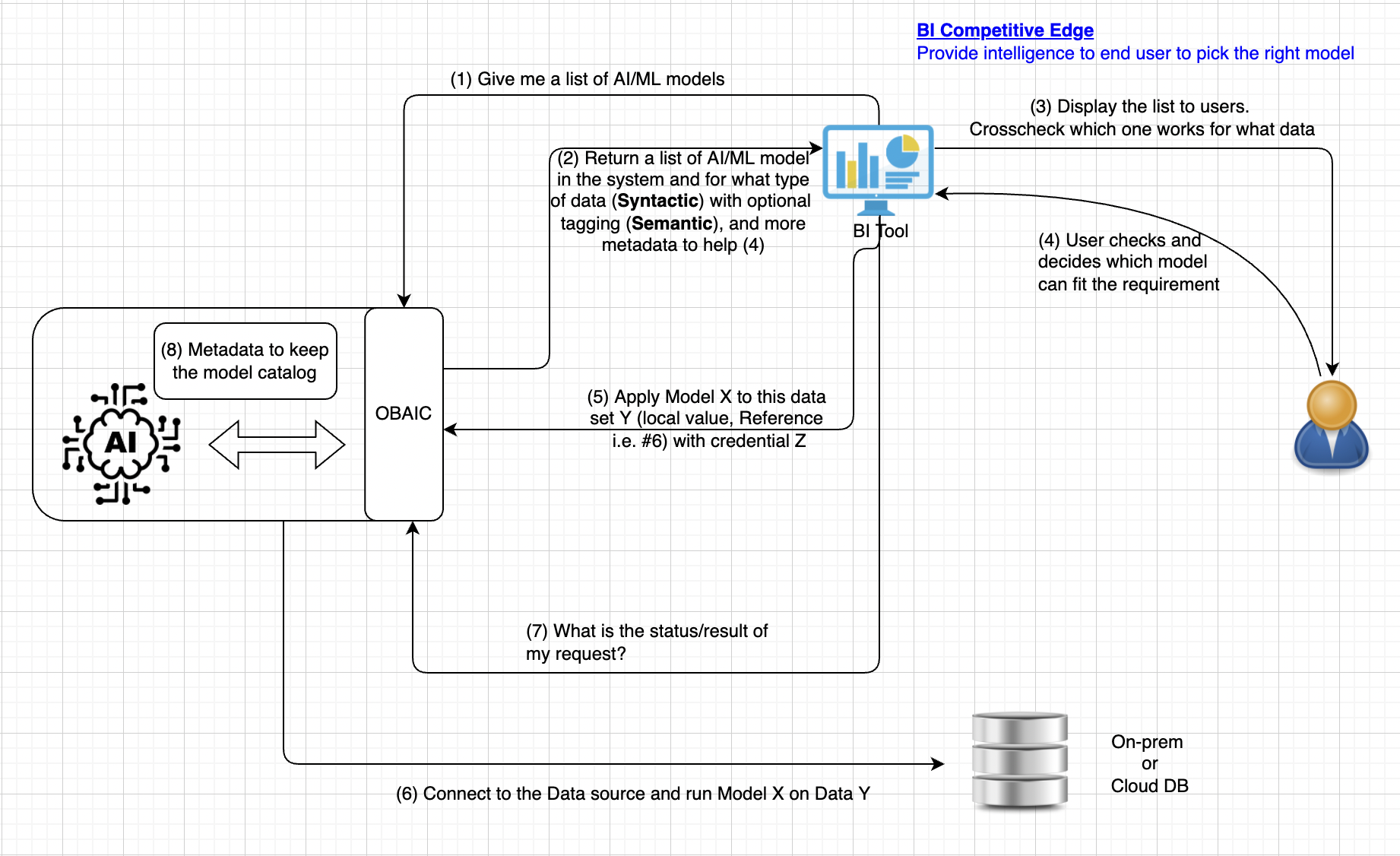

List Models - Step (1)

...

| title | API to list models accessible to the recipient |

|---|

...

GET

...

Authorization: Bearer {token}

...

{prefix}/models/{model}

...

maxResults (type: Int32, optional): The maximum number of results per page that should be returned. If the number of available results is larger than maxResult, the response will provide a nextPageToken that can be used to get the next page of results in subsequent list requests. The server may return fewer than maxResults items even if there are more available. The client should check nextPageToken in the response to determine if there are more available. Must be non-negative. 0 will return no results but nextPageToken may be populated.

pageToken (type: String, optional): Specifies a page token to use. Set pageToken to the nextPageToken returned by a previous list request to get the next page of results. nextPageToken will not be returned in a response if there are no more results available.

...

| title | 200: Models are returned successfully |

|---|

...

(b) BI tool, on behalf of the user, requests AI platform through OBAIC, to train/prepare a model that accepts features of a certain type (numeric, categorical, text, etc.)

Expand title API to train model using provided dataset Model configuration is based on configs from the open-source Ludwig project. At a minimum, we should be able to define inputs and outputs in a fairly standard way. Other model configuration parameters are subsumed by the options field.

The data stanza provides a bearer token allowing the ML provider to access the required data table(s) for training. The provided SQL query indicates how the training data should be extracted from the source.

Don't be confused with the Bearer token which is used to authenticate with OBAIC, and the dbToken which is created in 2(a) and AI platform will use that to access the data source for training

HTTP Request Value Method POSTHeader Authorization: Bearer {token}URL {prefix}/models/Query Parameters {"dbToken": "D41C4A382C27A4B5DF824E2D4F148";

"inputs":[

{

"name":"customerAge",

"type":"numeric"

},

{

"name":"activeInLastMonth",

"type":"binary"

}

],

"outputs":[

{

"name":"

...

canceledMembership",

"

...

type":"

...

binary"

}

],

"

...

modelOptions":

...

itemswill be an empty array when no results are found.idfield is the key to retrieved the model in the subsequent calls. Its value must be unique across the AI server and immutable through the model's lifecycle.nextPageTokenwill be missing when there are no additional results

{“providerSpecificOption”: “value”},

"data":{

"sourceType":"snowflake",

"endpoint":"some/endpoint",

"bearerToken":"...",

"query":"SELECT foo FROM bar WHERE baz"

}

}Expand title Alternatively, we may also consider to support SQL-like syntax for Model Training If we go beyond just REST API, SQL-like is an alternative as the syntax is also well-known

Use BigQuery ML model creation as an example and generalizing

CREATE MODEL (

customerAge WITH ENCODING (

type=numeric

),

activeInLastMonth WITH ENCODING (

type=binary

),

canceledMembership WITH DECODING (

type=binary

)

)

FROM myData (

sourceType=snowflake,

endpoint="some/endpoint",

bearerToken=<...>,

)AS (SELECT foo FROM BAR)

WITH OPTIONS ();Expand title 200: Training is started and the corresponding ID is return for future reference HTTP Response Value Header Content-Type: application/json; charset=utf-8Body {"modelID": "d677b054-2cd4-4711-959b-971af0081a73"}modelIDis generated and returned to the caller if training is started successfully. This will be used to check the status of the training, or for future Inference (see Inference section below)

- Not in the scope of OBAIC - AI Platform provider the implementation to fulfill the request by connecting to the datasource with the provided token and the set of training data specified in SQL. This step is up to how the AI platform interacts with the data source to performance the training.

BI tool polls for the status or retrieve the training result. If the training is still in progress, the status will be returned. When training is completed, results and performance of the model will be returned.

Expand title API to get model status HTTP Request Value Method GETHeader Authorization: Bearer {token}URL {prefix}/modelStatus?modelID=Query Parameters modelID (type: String): The modelID returned from previous OBAIC call either from training or list of Models.

Expand title 200: Status of the Model returned HTTP Response Value Header Content-Type: application/json; charset=utf-8Body {"modelID": "d677b054-2cd4-4711-959b-971af0081a73","status": "training","progress": "80",}modelIDis same ID provided in the requeststatuscan be training | inferencing | readyprogressis the estimated progress of the current status

- Not in the scope of OBAIC - BI tool presents the result to the user in their own way, which is the "secret sauce" and unique to each other.

High Level Protocol - Inference

List Models - Step (1)

| Expand | ||

|---|---|---|

| ||

Example:

```json
{
  "models": [
    {
      "name": "Model 1",
      "id": "6d4b571a-80ca-41ef-bc67-b158f4352ad8"
    },
    {
      "name": "Model 2",
      "id": "70d9ab9d-9a64-49a8-be4d-d3a678b4ab16"
    },
    {
      "name": "Model 3",
      "id": "99914a97-5d2e-4b9f-b81a-1d43c9409162"
    },
    {
      "name": "Model 4",
      "id": "8295bfda-7901-43e8-9d31-81fd1c3210ee"
    },
    {
      "name": "Model 5",
      "id": "0693c224-3a3f-4fe7-bbbe-c70f93d15f12"
    }
  ],
  "nextPageToken": "3xXc4ZAsqZQwgejt"
}
```
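The server may return fewer than `maxResults` items and signals further pages only through `nextPageToken`, so a client has to keep requesting until that token is absent. The Python sketch below shows that loop. It relies on some assumptions: `iter_models` and the injected `fetch_page` callable are hypothetical names, and `fetch_page` stands in for the actual `GET {prefix}/models` call so the paging logic can be exercised with canned responses.

```python
from typing import Callable, Dict, Iterator, Optional

# Hypothetical page fetcher: (maxResults, pageToken) -> parsed JSON body of one
# List Models response. A real one would call GET {prefix}/models with the
# maxResults/pageToken query parameters and the bearer token.
PageFetcher = Callable[[int, Optional[str]], Dict]


def iter_models(fetch_page: PageFetcher, max_results: int = 100) -> Iterator[Dict]:
    """Yield every model across all pages by following nextPageToken."""
    if max_results <= 0:
        # maxResults=0 returns no items, so paging would never make progress.
        raise ValueError("max_results must be positive")
    page_token: Optional[str] = None
    while True:
        page = fetch_page(max_results, page_token)
        # The server may return fewer than max_results items; that alone
        # does not mean the listing is finished.
        yield from page.get("models", [])
        page_token = page.get("nextPageToken")
        if not page_token:
            # nextPageToken is absent when there are no more results.
            return


if __name__ == "__main__":
    # Canned responses keyed by the pageToken that requests them.
    pages = {
        None: {"models": [{"name": "Model 1", "id": "6d4b571a-80ca-41ef-bc67-b158f4352ad8"}],
               "nextPageToken": "3xXc4ZAsqZQwgejt"},
        "3xXc4ZAsqZQwgejt": {"models": [{"name": "Model 2", "id": "70d9ab9d-9a64-49a8-be4d-d3a678b4ab16"}]},
    }
    names = [m["name"] for m in iter_models(lambda n, tok: pages[tok], max_results=1)]
    print(names)  # ['Model 1', 'Model 2']
```

Because `iter_models` is a generator, the `ValueError` for a non-positive page size surfaces on the first iteration rather than at the call itself.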

Get Model - Step (2)

Example:

```json
{
  "id": "6d4b571a-80ca-41ef-bc67-b158f4352ad8",
  "name": "Model 1",
  "revision": 3,
  "format": {
    "name": "PMML",
    "version": "4.3"
  },
  "algorithm": "Neural Network",
  "tags": [
    "Anomaly detection",
    "Banking"
  ],
  "dependency": "",
  "creator": "John Doe",
  "description": "This is a predictive model. Refer to {input} and {output} for the detailed format of each field, such as the value range of a field, as well as the possible predictions the model will give. You may also refer to the example data here.",
  "input": {
    "fields": [
      {
        "name": "Account ID",
        "opType": "categorical",
        "dataType": "string",
        "taxonomy": "ID",
        "example": "account abc-001",
        "allowMissing": false,
        "description": "unique value"
      },
      {
        "name": "Account Balance",
        "opType": "continuous",
        "dataType": "double",
        "taxonomy": "currency",
        "example": "1,378,560.00",
        "allowMissing": true,
        "description": "Minimum: 0, Maximum: 999,999,999.00"
      }
    ],
    "ref": "http://dmg.org/pmml/v4-3/pmml-4-3.xsd"
  },
  "output": {
    "fields": [
      {
        "name": "Churn",
        "opType": "continuous",
        "dataType": "string",
        "taxonomy": "ID",
        "example": "0.67",
        "allowMissing": false,
        "description": "the probability of the account ceasing to do business with the company over 6 months"
      }
    ],
    "ref": "http://dmg.org/pmml/v4-3/pmml-4-3.xsd"
  },
  "performance": {
    "metric": "accuracy",
    "value": 0.85
  },
  "rating": 5,
  "url": "uri://link_to_the_model"
}
```
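A BI tool can use the `input.fields` metadata returned by Get Model to sanity-check a row before asking for a prediction. The minimal Python sketch below assumes the metadata has the shape of the Get Model example response (trimmed to the input fields). It also assumes a simple mapping from `dataType` to Python types. `validate_record` and `PY_TYPES` are hypothetical names, not part of OBAIC.

```python
from typing import Any, Dict, List

# Trimmed copy of the Get Model example: only the input metadata used here.
MODEL = {
    "id": "6d4b571a-80ca-41ef-bc67-b158f4352ad8",
    "input": {
        "fields": [
            {"name": "Account ID", "dataType": "string", "allowMissing": False},
            {"name": "Account Balance", "dataType": "double", "allowMissing": True},
        ]
    },
}

# Assumed mapping from PMML-style dataType names to Python types.
PY_TYPES = {"string": str, "double": (int, float)}


def validate_record(model: Dict[str, Any], record: Dict[str, Any]) -> List[str]:
    """List problems with `record` against the model's input field metadata."""
    problems = []
    for field in model["input"]["fields"]:
        name = field["name"]
        value = record.get(name)
        if value is None:
            # A field without allowMissing is treated as required (assumption).
            if not field.get("allowMissing", False):
                problems.append(f"{name}: missing")
            continue
        expected = PY_TYPES.get(field.get("dataType"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"{name}: expected {field['dataType']}")
    return problems


if __name__ == "__main__":
    print(validate_record(MODEL, {"Account Balance": 1378560.00}))  # ['Account ID: missing']
    print(validate_record(MODEL, {"Account ID": "account abc-001"}))  # []
```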

Errors - Apply to all API calls above

- 400: The request is malformed

- 401: The request is unauthenticated. The bearer token is missing or incorrect

- 403: The request is forbidden from being fulfilled

- 500: The request is not handled correctly due to a server error
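The same status codes apply to every call, so a client can handle them in one place. The Python sketch below maps the listed codes to a single exception type. `ObaicError` and `check_status` are hypothetical names. The `errorCode`/`message` body is not yet defined, so the sketch carries the body through as raw text.

```python
from typing import Optional

# Meanings from the error list; the error body format (errorCode, message)
# is still listed as a future enhancement, so it is kept as raw text here.
ERROR_REASONS = {
    400: "The request is malformed",
    401: "The request is unauthenticated. The bearer token is missing or incorrect",
    403: "The request is forbidden from being fulfilled",
    500: "The request is not handled correctly due to a server error",
}


class ObaicError(Exception):
    """Hypothetical client-side exception for a failed OBAIC call."""

    def __init__(self, status: int, reason: str, body: Optional[str] = None):
        super().__init__(f"{status}: {reason}")
        self.status = status
        self.reason = reason
        self.body = body


def check_status(status: int, body: Optional[str] = None) -> None:
    """Return normally for 2xx responses; raise ObaicError otherwise."""
    if 200 <= status < 300:
        return
    reason = ERROR_REASONS.get(status, "Unexpected status")
    raise ObaicError(status, reason, body)


if __name__ == "__main__":
    try:
        check_status(401)
    except ObaicError as err:
        print(err)  # 401: The request is unauthenticated. The bearer token is missing or incorrect
```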

Next Steps

- Finalize the logo

- Determine which other AI frameworks besides ONNX and PMML can be supported by OBAIC

Potential Future Enhancements

- Formally define the JSON structures using JSON Schema (http://json-schema.org/) so that future development can validate them

- Define a data pipeline to transform data before running the model

- Define containerized models so that prediction can run in BI instead of in AI

- Define the format of `nextPageToken`

- Define different types of `errorCode` and `message` for each API call

FAQ

- Why should AI share models with BI?

  - OBAIC assumes an organization owns both the BI tool(s) and the AI platform(s). However, these are two (or more) discrete entities that may not have a good way to integrate, so OBAIC comes in to connect the dots.

- Who owns the model and the data?

  - The AI platform owns the model but shares it with BI tools through OBAIC. The data is owned by the business, but BI has been authorized to use it and to re-share it with AI for training and inference.

- How do you deal with security?

  - Calls are made over HTTPS and authorized with bearer tokens.

References

- Tableau version of OBAIC: https://tableau.github.io/analytics-extensions-api/docs/ae_example_tabpy.html

- Qlik version of OBAIC: https://github.com/qlik-oss/server-side-extension

- Delta Sharing: https://github.com/delta-io/delta-sharing/blob/main/PROTOCOL.md#delta-sharing-protocol

Authors

| Name | Affiliation |
|---|---|
| Cupid Chan | Pistevo Decision |
| Xiangxiang Meng | Redfin |
| Deepak Karuppiah | MicroStrategy |
| Nancy Rausch | SAS |
| Dalton Ruer | Qlik |
| Sachin Sinha | Microsoft |
| Yi Shao | IBM |
| Jeffrey Tang | Predibase |
| Lingyan Yin | Salesforce |

Train a New Model

`function TrainModel(inputs, outputs, modelOptions, dataConfig) -> UUID`

Example params:

...

```json
{
  "inputs": [
    {
      "name": "customerAge",
      "type": "numeric"
    },
    {
      "name": "activeInLastMonth",
      "type": "binary"
    }
  ],
  "outputs": [
    {
      "name": "canceledMembership",
      "type": "binary"
    }
  ],
  "modelOptions": {
    "providerSpecificOption": "value"
  },
  "data": {
    "sourceType": "snowflake",
    "endpoint": "some/endpoint",
    "bearerToken": "...",
    "query": "SELECT foo FROM bar WHERE baz"
  }
}
```
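`TrainModel` maps naturally onto the REST calls described for training. The client POSTs the parameters to `{prefix}/models`, reads back `modelID`, then polls `{prefix}/modelStatus` until the model is `ready`. A minimal Python sketch follows, with `train_model` as a Python-style rendering of `TrainModel`. The sketch relies on a hypothetical injected `send(method, path, body)` transport so it runs without a server. The polling interval, the timeout, and treating `ready` as the only terminal status are also assumptions, since the protocol does not yet define failure states.

```python
import time
from typing import Any, Callable, Dict, List, Optional
from urllib.parse import quote

# Hypothetical transport: (method, path, JSON body or None) -> parsed JSON reply.
# A real one would prepend {prefix}, send "Authorization: Bearer {token}", and
# raise on the error statuses listed in the Error section.
Send = Callable[[str, str, Optional[Dict[str, Any]]], Dict[str, Any]]


def train_model(send: Send, inputs: List[Dict[str, str]], outputs: List[Dict[str, str]],
                model_options: Dict[str, Any], data_config: Dict[str, Any],
                db_token: Optional[str] = None) -> str:
    """POST a training request to {prefix}/models and return its modelID."""
    body: Dict[str, Any] = {
        "inputs": inputs,
        "outputs": outputs,
        "modelOptions": model_options,
        "data": data_config,
    }
    if db_token is not None:
        # Data-source token from step 2(a), not the OBAIC bearer token.
        body["dbToken"] = db_token
    return send("POST", "/models", body)["modelID"]


def wait_until_ready(send: Send, model_id: str, interval_s: float = 5.0,
                     timeout_s: float = 3600.0) -> Dict[str, Any]:
    """Poll {prefix}/modelStatus until status is "ready" or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = send("GET", f"/modelStatus?modelID={quote(model_id)}", None)
        if status.get("status") == "ready":
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"model {model_id} not ready after {timeout_s} s")
        time.sleep(interval_s)


if __name__ == "__main__":
    # Fake server: accepts the training request, reports "training" twice, then "ready".
    replies = iter(["training", "training", "ready"])

    def fake_send(method: str, path: str, body: Optional[Dict[str, Any]]) -> Dict[str, Any]:
        if method == "POST":
            return {"modelID": "d677b054-2cd4-4711-959b-971af0081a73"}
        return {"modelID": "d677b054-2cd4-4711-959b-971af0081a73", "status": next(replies)}

    model_id = train_model(
        fake_send,
        inputs=[{"name": "customerAge", "type": "numeric"},
                {"name": "activeInLastMonth", "type": "binary"}],
        outputs=[{"name": "canceledMembership", "type": "binary"}],
        model_options={"providerSpecificOption": "value"},
        data_config={"sourceType": "snowflake", "endpoint": "some/endpoint",
                     "query": "SELECT foo FROM bar WHERE baz"},
    )
    print(wait_until_ready(fake_send, model_id, interval_s=0)["status"])  # ready
```

Injecting the transport keeps the protocol logic separate from HTTP details such as the bearer token and error handling.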


Example response:

{


List Models

`function ListModels() -> List[UUID, Status]`

Example response:

```json
{
  "models": [
    { "modelUUID": "abcdef0123", "status": "deployed" },
    { "modelUUID": "1234567890", "status": "training" }
  ]
}
```

...

Show Model Config

`function GetModelConfig(UUID) -> Config`

...